The Rise of Wireless Charging & Its Implications

Wireless charging is on the verge of surrounding us in our everyday lives. With the latest generation of smartphones supporting it natively, demand is compounding. Manufacturers ranging from furniture suppliers to apparel designers are racing to incorporate it into their products. But that enthusiasm is misguided: most consumers, who are driving the demand, lack even a basic understanding of how the technology works, leaving them unaware of its impact on the energy grid and, in turn, the environment.

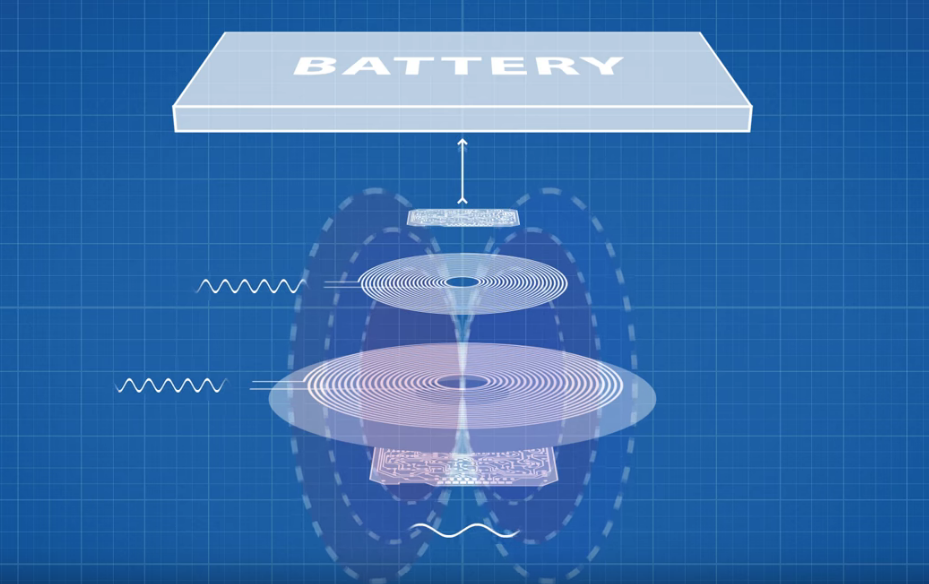

More formally known as inductive coupling, wireless charging is a method of transferring power from one body to another across a small gap (roughly 2-8 mm) using an electromagnetic field. While it offers the minor convenience of not having to plug a device in to charge, this method is not efficient. It’s actually far from it… barely over 50%.

What do I mean by ‘efficient’? Think of it as the share of the energy put in that actually gets used rather than lost. In this technology, when energy is emitted broadly from a transmitter (think of a WiFi router beaming out a signal), only a portion of it is absorbed by its target. What is not absorbed is lost to the surroundings, largely as heat. In fact, Qi (pronounced “chee”), the standard maintained by the Wireless Power Consortium, is by far the most effective version of wireless inductive charging, and even it is limited to 59% efficiency. This means that 41% of the total energy emitted is simply wasted.

This is important to note because the device being charged still requires the same amount of energy to function, so we inevitably end up pumping more energy into the process to compensate for what is not absorbed. This presents a conflict when considering the priority we have placed on curbing our energy use and limiting the greenhouse gas emissions created during energy production. Obviously, the stuff that comes out of the wall to power your Nespresso and charge your iPad is the same stuff that we burn coal, oil and gas to generate. So it stands to reason that the more energy our devices require, the more we lean on the processes that are contributing to climate change, and that doing so in the absence of true utility is, well, stupid.
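To make the 59% figure concrete, here is a rough sketch of the arithmetic. The efficiency value is the one quoted above; the 10 Wh battery size is purely a hypothetical number chosen for illustration and does not come from the article.

```python
def energy_drawn_wh(energy_needed_wh: float, efficiency: float) -> float:
    """Energy that must be emitted so the device receives energy_needed_wh."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return energy_needed_wh / efficiency


QI_EFFICIENCY = 0.59  # figure quoted in the article
BATTERY_WH = 10.0     # hypothetical battery size, for illustration only

drawn = energy_drawn_wh(BATTERY_WH, QI_EFFICIENCY)
wasted = drawn - BATTERY_WH

# Share of the emitted energy that never reaches the battery.
wasted_share_of_emitted = wasted / drawn
# Extra energy needed compared with a lossless transfer.
extra_vs_lossless = wasted / BATTERY_WH

print(f"Energy emitted:  {drawn:.2f} Wh")
print(f"Energy wasted:   {wasted:.2f} Wh")
print(f"Wasted share of emitted energy: {wasted_share_of_emitted:.0%}")
print(f"Extra energy vs. lossless:      {extra_vs_lossless:.0%}")
```

Note the two different percentages: 41% of what is emitted is lost, but relative to what the battery actually needs, the transmitter has to put out roughly 70% more.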

To illustrate the absurdity of wireless charging in a more approachable way, consider the following. Imagine that you are sitting in your favorite cafe, about to sip from a glass of water. Most of us would simply lift the glass to our mouths and take a drink. Others might use a straw. Either of these techniques would get 99.99% of the water into your mouth. Very efficient. Now, let's say someone comes along and says, “Hey, we have a new way for you to ingest that water that alleviates the need for you to lift the glass to your mouth, which is a big waste of time. Care to try it?” Being curious, you accept. They instruct you to sit, relax and simply open your mouth. The person lifts your glass and throws the water at your face from a few feet away. You are now shocked, wet and probably remorseful. In this scenario you'd likely end up with, at best, 59% of the water in your mouth and the rest, well, everywhere else. After some reflection, you'd likely conclude that this was not at all an efficient way of consuming water, especially considering that here you are, wet and still thirsty, and now you have to go fill up your glass again.

Later, you may further realize that water is a precious resource and that wasting it, quite unnecessarily, is not ideal. Well, power and electricity are precious too, and for next to zero benefit we find ourselves eager to adopt a technology that wastes almost half the electricity used to charge a device. It’s also slower… taking over 2 hours to charge an iPhone… so I have to ask, why are we doing this?

To bring a little more context to bear, let’s scale up from a single adoption. If each phone is charged only once a day and there is only one phone per household, this equates to 278 megawatt hours (MWh) of extra electricity generation needed in one year... which is about 25 years’ worth of electricity for the average single home in America. This excludes multiple devices per person and multiple people per household. Increasing those factors would compound the energy consumption.
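For readers who want to play with the scaling themselves, the sketch below shows one way the yearly overhead could be estimated. It is not a reconstruction of the 278 MWh figure: the article does not state the battery size or the number of households behind it, so `battery_wh` and `households` here are hypothetical inputs, and the comparison is against a lossless transfer for simplicity.

```python
def extra_energy_per_year_mwh(
    households: int,
    battery_wh: float,
    efficiency: float,
    charges_per_day: int = 1,
    days: int = 365,
) -> float:
    """Extra energy per year, in MWh, compared with a lossless transfer.

    Assumes one phone per household and a full charge each time.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    # Energy lost on every charge: what is emitted minus what is stored.
    extra_per_charge_wh = battery_wh / efficiency - battery_wh
    total_wh = households * charges_per_day * days * extra_per_charge_wh
    return total_wh / 1_000_000  # Wh -> MWh


# Hypothetical inputs: 100,000 households, 10 Wh battery, 59% efficiency.
estimate = extra_energy_per_year_mwh(
    households=100_000, battery_wh=10.0, efficiency=0.59
)
print(f"Estimated extra generation: {estimate:.1f} MWh per year")
```

Raising `charges_per_day`, or treating each extra device as another "household", shows how quickly the overhead compounds.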

Research on wireless charging and its applications continues, and efficiency rates are climbing. Experiments at MIT in recent years have reported improved efficiency and transfer over distances of several meters by using resonant coupling, a similar but distinct method of wireless energy transfer. Even so, some of this research is over 15 years old and these improvements have yet to make their way into commercial applications. The implication is that widespread adoption will come at the expense of our efforts to conserve energy and slow the growth of energy production. While I firmly believe that the average person does indeed care about their carbon footprint, they do so only as far as it is convenient to their general lifestyle choices. The complex mechanics of this technology likely don’t even register as a blip on the average consumer’s radar. What do we do about that?

source: Real Engineering